
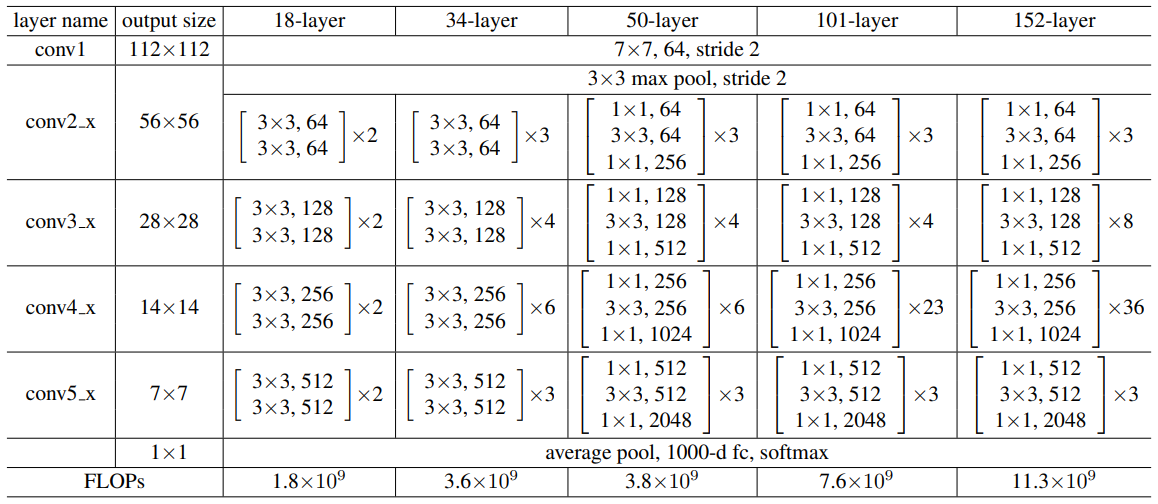

```python
import paddle.nn as nn

# ConvBN2d, BasicBlock and Bottleneck are not shown in this section and are
# assumed to be defined elsewhere (each block class exposes `expansion`).


class ResNet(nn.Layer):
    def __init__(self, in_channels=3, n_classes=2, mtype=50):
        '''
        * `in_channels`: number of input channels
        * `n_classes`: number of output classes
        * `mtype`: ResNet variant (18/34/50/101/152)
        '''
        super(ResNet, self).__init__()
        # Block type and number of residual blocks in each of the four stages
        if mtype == 18:
            self.Block, n_blocks = BasicBlock, [2, 2, 2, 2]
        elif mtype == 34:
            self.Block, n_blocks = BasicBlock, [3, 4, 6, 3]
        elif mtype == 50:
            self.Block, n_blocks = Bottleneck, [3, 4, 6, 3]
        elif mtype == 101:
            self.Block, n_blocks = Bottleneck, [3, 4, 23, 3]
        elif mtype == 152:
            self.Block, n_blocks = Bottleneck, [3, 8, 36, 3]
        else:
            raise NotImplementedError("`mtype` must be in [18, 34, 50, 101, 152]")
        self.e = self.Block.expansion  # channel expansion factor of the block

        # Stem: 7x7 conv (stride 2) followed by 3x3 max pooling (stride 2)
        self.conv1 = ConvBN2d(in_channels, 64, 7, 2, 3, "relu")
        self.pool1 = nn.MaxPool2D(3, 2, 1)
        # Four residual stages; stages 3-5 halve the spatial resolution
        self.conv2 = self._res_blocks(n_blocks[0], 64, 64, 1)
        self.conv3 = self._res_blocks(n_blocks[1], 64 * self.e, 128, 2)
        self.conv4 = self._res_blocks(n_blocks[2], 128 * self.e, 256, 2)
        self.conv5 = self._res_blocks(n_blocks[3], 256 * self.e, 512, 2)
        # Global average pooling and the classification head
        self.pool2 = nn.AdaptiveAvgPool2D((1, 1))
        self.linear = nn.Sequential(nn.Flatten(1, -1),
                                    nn.Linear(512 * self.e, n_classes))

    def forward(self, x):
        x = self.conv1(x)
        x = self.pool1(x)
        x = self.conv2(x)
        x = self.conv3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.pool2(x)
        y = self.linear(x)
        return y

    def _res_blocks(self, n_block, in_size, out_size, stride):
        '''
        * `n_block`: number of residual blocks
        * `in_size`: number of input channels of the first convolutional layer
        * `out_size`: number of output channels of the first convolutional layer
        * `stride`: stride of the convolution in the first residual block
        '''
        # Only the first block may change the resolution and channel count
        blocks = [self.Block(in_size, out_size, stride),]
        in_size = out_size * self.e
        for _ in range(1, n_block):
            blocks.append(self.Block(in_size, out_size, stride=1))
        return nn.Sequential(*blocks)
```
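The value of `mtype` is the network depth. It counts the stem convolution, every convolution inside the residual blocks, and the final `nn.Linear` layer. Below is a minimal pure-Python sketch that needs no PaddlePaddle. It checks that arithmetic against the block configurations above, mirrors how `_res_blocks` updates `in_size`, and traces the feature-map size for a 224x224 input. The helper names (`CONFIGS`, `depth`, `stage_channels`, `spatial_trace`) are hypothetical. `BasicBlock` and `Bottleneck` are not shown here, so the sketch assumes the standard ResNet values for them: 2 and 3 convolutions per block, and expansion factors of 1 and 4. It also assumes that the stride of each stage's first block is applied by a 3x3 convolution with padding 1.

```python
# Hypothetical helpers (not part of the original code). Assumed standard values:
# BasicBlock = 2 convs, expansion 1; Bottleneck = 3 convs, expansion 4.

# mtype -> (convolutions per block, expansion, blocks per stage)
CONFIGS = {
    18: (2, 1, [2, 2, 2, 2]),    # BasicBlock
    34: (2, 1, [3, 4, 6, 3]),    # BasicBlock
    50: (3, 4, [3, 4, 6, 3]),    # Bottleneck
    101: (3, 4, [3, 4, 23, 3]),  # Bottleneck
    152: (3, 4, [3, 8, 36, 3]),  # Bottleneck
}


def depth(mtype):
    """Weighted layers: conv1 + every convolution in the blocks + the final Linear."""
    convs_per_block, _, n_blocks = CONFIGS[mtype]
    return 1 + convs_per_block * sum(n_blocks) + 1


def stage_channels(mtype):
    """(in_size, out_size, stride, output channels) of each stage's first block."""
    _, e, _ = CONFIGS[mtype]
    stages = []
    in_size = 64  # channels produced by conv1 / pool1
    for out_size, stride in [(64, 1), (128, 2), (256, 2), (512, 2)]:
        stages.append((in_size, out_size, stride, out_size * e))
        in_size = out_size * e  # same update as in _res_blocks
    return stages


def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def spatial_trace(size=224):
    """Feature-map side length after the input, conv1, pool1 and each stage."""
    trace = [size]
    size = conv_out(size, 7, 2, 3)  # conv1: 7x7, stride 2, padding 3
    trace.append(size)
    size = conv_out(size, 3, 2, 1)  # pool1: 3x3, stride 2, padding 1
    trace.append(size)
    for stride in (1, 2, 2, 2):     # assumed: 3x3 conv, padding 1 carries the stride
        size = conv_out(size, 3, stride, 1)
        trace.append(size)
    return trace


if __name__ == "__main__":
    for m in CONFIGS:
        print(m, depth(m), stage_channels(m)[-1][-1])
    print(spatial_trace())
```

For every variant, `depth(m)` comes out equal to `m`. For example, ResNet-50 gives 1 + 3 x 16 + 1 = 50. The last stage's output channels (512 or 2048) match the `512 * self.e` input size of `nn.Linear`. For a 224x224 input, the trace is `[224, 112, 56, 56, 28, 14, 7]`, after which `AdaptiveAvgPool2D((1, 1))` reduces the 7x7 map to 1x1.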